What Actually Determines Whether AI Models Select, Cite, or Ignore Your Content

Definition

LLM ranking factors are the signals AI models use to decide which content to:

- retrieve

- interpret

- trust

- reuse

- cite

- include in generated answers

Unlike traditional SEO, LLM ranking is not page-based—it is meaning-based.

To learn the foundational differences, see:

LLM SEO vs Traditional SEO

The Core Ranking Factors Used by AI Models

1. Semantic Clarity

LLMs must immediately understand:

- what you’re saying

- what the concept means

- how the idea fits into a known category

AI does not rank unclear content.

For more on AI interpretation, see:

How AI Models Interpret Websites

2. Entity Strength

The model must understand who you are and what your content represents.

Entity strength depends on:

- consistent definitions

- stable terminology

- clear category placement

- reinforcement across pages

Learn the fundamentals here:

Entity-Based Optimization Explained

3. Extractability

AI models favor content that can be cleanly lifted into answers:

- definitions

- lists

- frameworks

- stepwise explanations

- short, complete sentences

Low extractability = low visibility.

For more, see:

Extractability: The #1 LLM SEO Signal
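One way to audit a page for extractability is to flag sentences that are short, complete, and shaped like definitions. The sketch below does this with a naive sentence splitter and a regular expression. The 25-word limit and the "X is Y" pattern are editorial assumptions, not thresholds any AI model is known to use.

```python
import re

# Editorial assumptions, not thresholds published for any AI model.
MAX_WORDS = 25
# Matches definition-shaped openings such as "Extractability is ..." or "LLM SEO refers to ...".
DEFINITION_PATTERN = re.compile(r"^[A-Z][\w\s-]* (is|are|refers to|means) ")


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after ., ! or ? when followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def extractable_sentences(text: str, max_words: int = MAX_WORDS) -> list[str]:
    """Return short, complete sentences, with definition-style ones first."""
    candidates = [
        s for s in split_sentences(text)
        if len(s.split()) <= max_words and s.endswith((".", "!", "?"))
    ]
    # sorted() is stable, so sentences keep their original order within each group.
    return sorted(candidates, key=lambda s: 0 if DEFINITION_PATTERN.match(s) else 1)


if __name__ == "__main__":
    sample = (
        "Lists help. "
        "Extractability is how easily a model can lift a statement into an answer. "
        "Long, winding paragraphs that hedge every claim and wander across several "
        "loosely related ideas before ever reaching a point are much harder for any "
        "system to quote cleanly without distortion."
    )
    for sentence in extractable_sentences(sample):
        print(sentence)
```

The long final sentence is dropped, and the definition-style sentence is listed before the shorter fragment.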

4. Consistency Across Your Site

If your terminology or definitions shift from page to page, AI models form a weaker picture of what you mean and place less trust in your content.

Consistency influences:

- authority

- retrieval quality

- entity clarity

- source selection

- inclusion in answers

Supporting detail here:

What Counts as Authority in LLM SEO
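A rough way to check consistency is to collect how each page defines a core term and see whether the definitions agree. This minimal sketch assumes you have already extracted one definition sentence per page; the page paths and definitions are hypothetical. It treats any wording difference beyond case, punctuation, and spacing as drift, which is a deliberately crude proxy for semantic inconsistency.

```python
import re
from collections import defaultdict


def normalize(definition: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace so trivial differences don't count."""
    cleaned = re.sub(r"[^\w\s]", "", definition.lower())
    return " ".join(cleaned.split())


def find_inconsistent_definitions(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group page paths by normalized definition; an empty result means all pages agree."""
    groups: dict[str, list[str]] = defaultdict(list)
    for path, definition in pages.items():
        groups[normalize(definition)].append(path)
    if len(groups) <= 1:
        return {}
    return dict(groups)


if __name__ == "__main__":
    # Hypothetical pages and definitions, for illustration only.
    pages = {
        "/what-is-llm-seo": "LLM SEO is optimizing content for AI answers.",
        "/llm-seo-guide": "LLM SEO is optimizing content for AI answers",
        "/pricing": "LLM SEO means ranking in ChatGPT.",
    }
    for definition, paths in find_inconsistent_definitions(pages).items():
        print(definition, "->", paths)
```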

5. Conceptual Accuracy

LLMs cross-check your information with:

- internal knowledge

- known conceptual frameworks

- established facts

If your content conflicts with stable knowledge patterns, AI will avoid using it.

6. Structural Organization

AI models interpret structure to understand meaning.

They prefer:

- clear headings

- short sections

- logical sequencing

- lists and frameworks

- high signal-to-noise content

Models use structure to judge which parts of a page answer a question cleanly.

See comparison:

Semantic Search vs LLM Search

7. Reinforcement Depth Within Your Topic Cluster

A single article does not establish authority.

AI needs:

- multiple articles covering a topic

- consistent definitions across pages

- semantic links between concepts

- related subtopics expanding expertise

This is why Pillar #1 includes a 25-article cluster.

8. Safety & Risk Filtering

Models avoid content that appears:

- ambiguous

- unsupported

- contradictory

- sensational

- speculative

- risky to cite

Safe content = answer inclusion.

Risky content = filtered out.

9. Alignment With AI's Internal Knowledge

The more your content matches known patterns:

- definitions

- relationships

- terminology

- category placement

…the more likely the model is to use and cite your pages.

10. Answer Fit

Perhaps the most important factor:

Does your content directly fit the answer the model wants to generate?

LLMs choose precise statements that match user intent—not entire pages.
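Retrieval-augmented systems typically split pages into passages and score each passage against the query, which is why a single well-fitting statement can be selected from an otherwise unrelated page. The toy sketch below illustrates that idea with word-count vectors and cosine similarity. Production systems generally use learned embeddings and larger chunks, so treat this as an illustration of passage-level selection, not as how any specific model ranks content.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def best_passage(query: str, page: str) -> tuple[str, float]:
    """Score each sentence of a (non-empty) page against the query and return the best match."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", page) if s.strip()]
    q = tokenize(query)
    scored = [(s, cosine(q, tokenize(s))) for s in sentences]
    return max(scored, key=lambda pair: pair[1])


if __name__ == "__main__":
    # Hypothetical page text, for illustration only.
    page = (
        "Our agency was founded in 2019. "
        "Answer fit is how closely a statement matches the answer a model wants to generate. "
        "Contact us today."
    )
    print(best_passage("what is answer fit", page))
```

Only the middle sentence shares words with the query, so it is the passage selected; the rest of the page contributes nothing to the answer.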

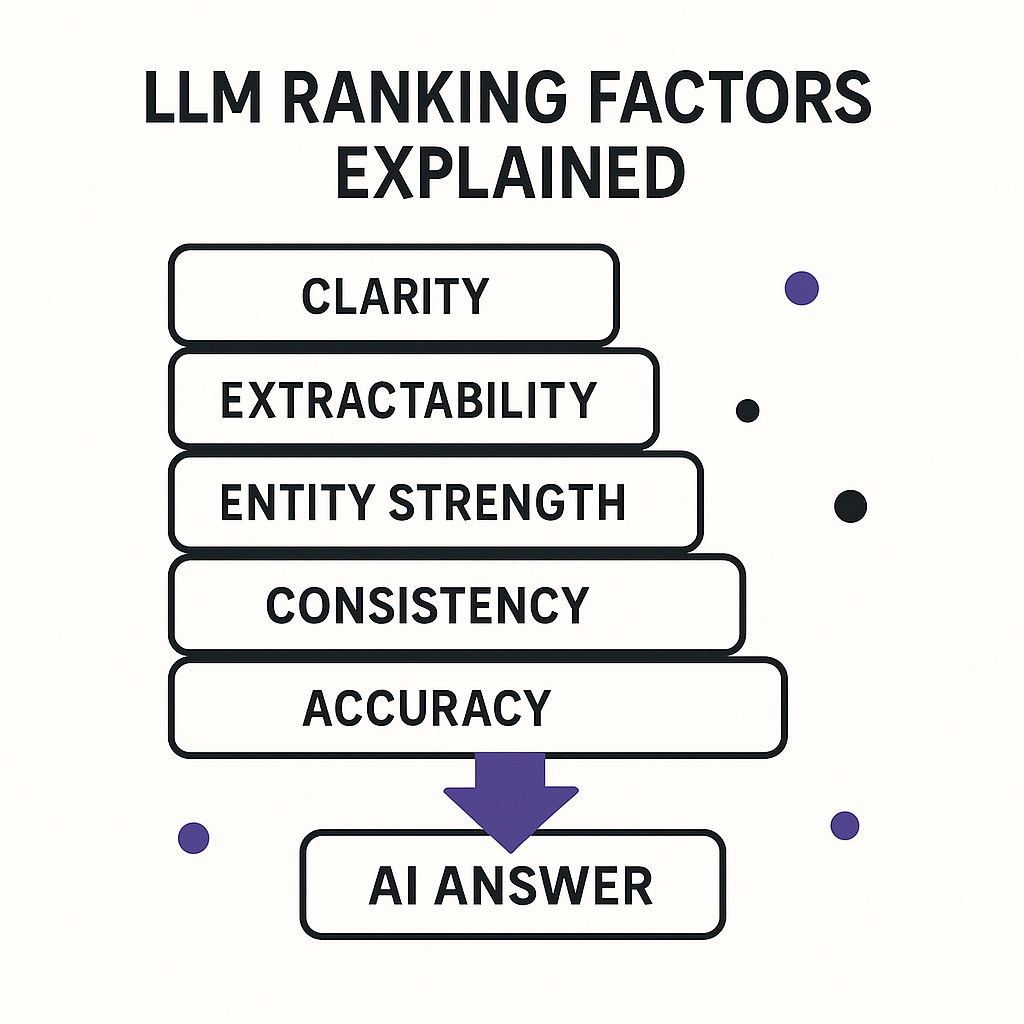

The Top 5 Ranking Factors (Summarized)

LLMs prioritize:

- Semantic clarity

- Extractable statements

- Entity strength

- Consistency across pages

- Accuracy and alignment with known knowledge

Master these, and your content is far more likely to become a default answer source.

Mini-Framework: The LLM Ranking Ladder

Step 1 — Interpretability

Is the content easy for AI to understand?

Step 2 — Extractability

Can AI lift the content into an answer?

Step 3 — Entity Confidence

Does AI trust the source?

Step 4 — Cluster Reinforcement

Do related articles confirm your expertise?

Step 5 — Answer Fit

Does your content match the intent of the question?

You can think of it as:

Meaning → Trust → Fit

Common Misunderstandings

Many traditional SEO assumptions do not carry over to LLMs. In practice:

- LLMs do not use backlinks

- LLMs do not use page-level ranking signals

- Keywords do not influence ranking

- Metadata has minimal influence

- Traffic does not equal authority

- Domain age does not matter

- E-E-A-T is not how LLMs evaluate trust

LLMs evaluate semantic quality, not SEO metrics.

Frequently Asked Questions

What does “ranking” mean in the context of LLMs?

LLMs do not rank pages like Google. Instead, they rank concepts, entities, and explanations. The highest-ranked information is what the model chooses to include, reference, or synthesize when generating an answer.

How are LLM ranking factors different from traditional SEO ranking factors?

Traditional SEO rankings depend on backlinks, crawlability, domain authority, and keyword relevance. LLM rankings depend on semantic clarity, entity strength, extractable statements, content consistency, and how well information fits into the model’s reasoning and answer patterns.

What signals do LLMs prioritize when selecting content for answers?

LLMs prioritize entity clarity, stable terminology, extractable definitions, consistent messaging across pages, conceptual depth, and patterns that reinforce your authority. They use these signals to determine which content is safest and most useful to include in an answer.

Why is semantic consistency so important for LLM rankings?

Semantic consistency strengthens the model’s understanding of who you are and what you do. When terminology and messaging remain stable, the model assigns you a stronger internal representation, increasing the chances that it uses your content in generated responses.

How does extractability influence LLM ranking decisions?

Extractable content—short, clear statements that can be copied into answers—ranks highly because it reduces the model’s risk of producing errors. Pages with strong definitions, frameworks, and concise explanations are more likely to be incorporated into answers.

Do LLMs prefer longer content when ranking sources?

LLMs prefer depth, not length. Detailed explanations, clear definitions, and structured insights increase ranking potential. Unstructured or overly long text without reinforcement of meaning does not improve rankings and may reduce clarity.

Do contradictions or inconsistent messaging hurt LLM rankings?

Yes. Contradictions weaken entity confidence. When explanations differ across pages, the model becomes uncertain and may avoid using your content in answers or default to a competitor with clearer, more stable signals.

Does schema markup influence LLM ranking?

Schema markup improves interpretability by explicitly defining your entities. It is not a direct ranking factor in generative models, but it strengthens semantic clarity, one of the core LLM ranking factors, so its influence is indirect.
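Schema markup is commonly embedded in a page as JSON-LD. A minimal sketch that generates a schema.org `Organization` block is shown here; the organization name, URLs, and description are placeholders, and `Organization` is only one of several schema.org types you might use to describe your entity.

```python
import json


def organization_jsonld(name: str, url: str, description: str, same_as: list[str]) -> str:
    """Build a schema.org Organization block as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": same_as,  # other profiles that refer to the same entity
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script element used to embed it in HTML."""
    return f'<script type="application/ld+json">\n{jsonld}\n</script>'


if __name__ == "__main__":
    # Placeholder values, for illustration only.
    block = organization_jsonld(
        name="Example Co",
        url="https://www.example.com",
        description="Example Co provides LLM SEO consulting.",
        same_as=["https://www.linkedin.com/company/example-co"],
    )
    print(script_tag(block))
```

Keeping the `description` identical to the definition used on your pages reinforces the consistency signal discussed earlier.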

How does internal linking affect LLM ranking?

Internal linking forms semantic clusters that help the model understand relationships between concepts. Strong, consistent internal linking reinforces entity definitions and makes the model more confident in using your content in answers.
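Internal links can be treated as a graph. Assuming every article in a cluster should be reachable from its pillar page, a breadth-first search from the pillar finds articles the cluster never links to. The paths below are hypothetical, and every cluster page must appear as a key in `links` (with an empty list if it has no outbound links).

```python
from collections import deque


def unreachable_pages(links: dict[str, list[str]], pillar: str) -> set[str]:
    """Return cluster pages that cannot be reached from the pillar by following internal links."""
    seen = {pillar}
    queue = deque([pillar])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return set(links) - seen


if __name__ == "__main__":
    # Hypothetical cluster: "/c" links to the pillar, but nothing links to "/c".
    links = {
        "/pillar": ["/a", "/b"],
        "/a": ["/pillar"],
        "/b": [],
        "/c": ["/pillar"],
    }
    print(unreachable_pages(links, "/pillar"))
```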

How does entity authority influence LLM ranking factors?

Entity authority reflects how deeply the model understands your brand. When your entity has strong clarity, consistent positioning, and reinforced expertise, the model treats you as a reliable source and ranks your information higher during answer creation.

Do LLMs consider content freshness when ranking sources?

LLMs rely primarily on training data, but retrieval-augmented systems do consider freshness. Outdated content, contradictory definitions, or obsolete explanations weaken ranking signals, especially when competitors provide clearer, more current information.

What practical steps help improve my LLM ranking performance quickly?

The fastest improvements come from clarifying entity definitions, fixing inconsistent messaging, adding extractable statements, strengthening internal linking, and structuring content around clear frameworks and explanations.

💡 Try this in ChatGPT

- Summarize the article "LLM Ranking Factors Explained" from https://www.rankforllm.com/llm-ranking-factors-explained/ in 3 bullet points for a board update.

- Turn the article "LLM Ranking Factors Explained" (https://www.rankforllm.com/llm-ranking-factors-explained/) into a 60-second talking script with one example and one CTA.

- Extract 5 SEO keywords and 3 internal link ideas from "LLM Ranking Factors Explained": https://www.rankforllm.com/llm-ranking-factors-explained/.

- Create 3 tweet ideas and a LinkedIn post that expand on this LLM SEO topic using the article at https://www.rankforllm.com/llm-ranking-factors-explained/.

Tip: Paste the whole prompt (with the URL) so the AI can fetch context.
