The Real Criteria AI Models Use to Determine What Content Is Trustworthy, Useful, and Answer-Ready

Definition

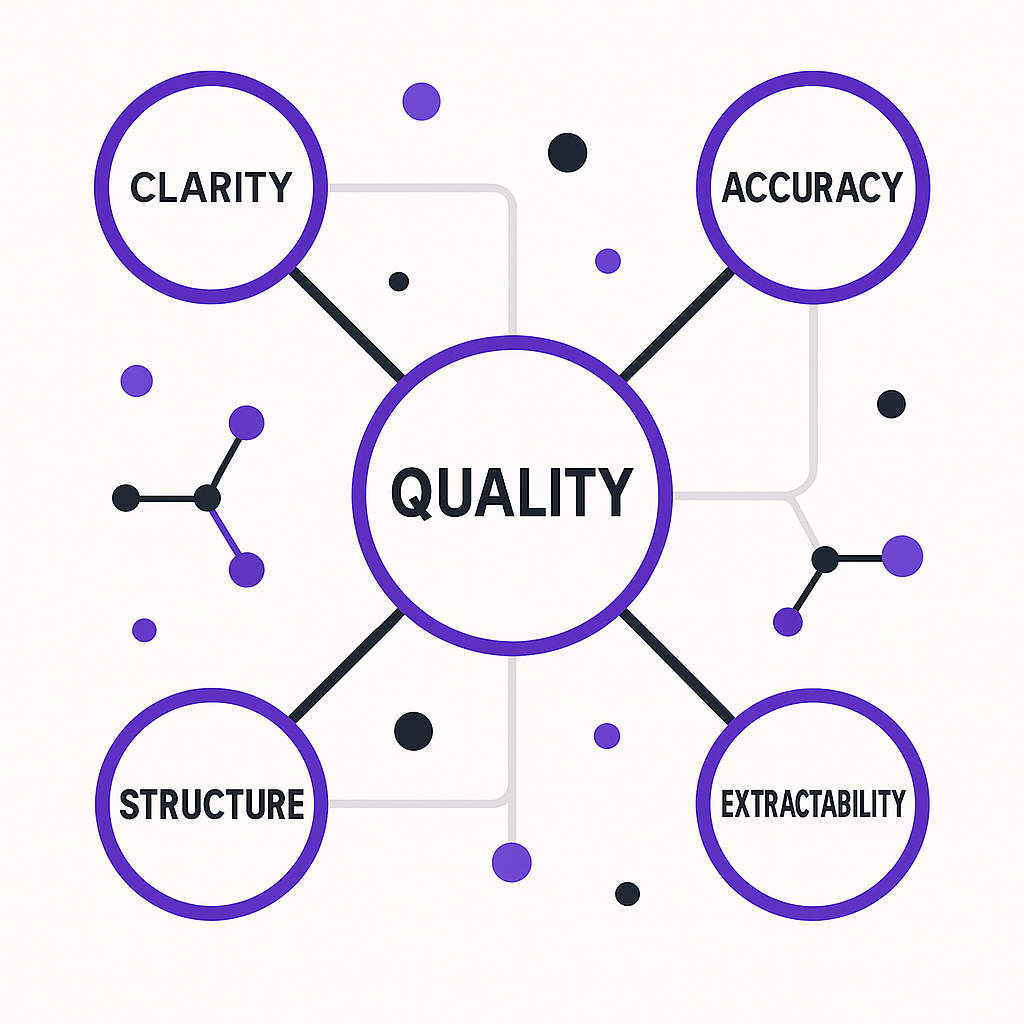

Content quality in LLM SEO refers to how easily an AI model can interpret, classify, and use your content in generated answers. Quality is based on:

- semantic clarity

- conceptual accuracy

- structure

- extractability

- consistency

- safety

Traditional SEO metrics, such as keyword usage and backlinks, do not determine these LLM decisions.

For comparison, see:

LLM SEO vs Traditional SEO

How LLMs Actually Measure Content Quality

1. Clarity of Meaning

AI models prefer content that:

- defines concepts cleanly

- avoids vague language

- uses simple, declarative statements

- expresses one idea per sentence

Clarity is the #1 content-quality factor in LLM search.

To understand how AI interprets meaning, see:

How AI Models Interpret Websites
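The clarity rules listed above can be partly checked before publishing. The Python sketch below flags sentences that run long or lean on vague words. It is an editorial heuristic, not a model of how any LLM scores text. The 20-word limit, the `VAGUE_TERMS` list, and the helper names `split_sentences` and `clarity_report` are all assumptions to tune to your own style guide.

```python
import re

# Illustrative (hypothetical) list of vague words; tune it to your style guide.
VAGUE_TERMS = {"various", "things", "stuff", "many", "basically", "etc"}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: breaks after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [part for part in parts if part]


def clarity_report(text: str, max_words: int = 20) -> list[dict]:
    """Return only the sentences that are too long or contain vague terms."""
    report = []
    for sentence in split_sentences(text):
        words = re.findall(r"[A-Za-z']+", sentence.lower())
        vague = sorted(set(words) & VAGUE_TERMS)
        too_long = len(words) > max_words
        if vague or too_long:
            report.append(
                {"sentence": sentence, "words": len(words), "vague": vague, "too_long": too_long}
            )
    return report


sample = (
    "Extractability is how easily AI can lift text into an answer. "
    "There are various things that basically help with it in many ways."
)
for item in clarity_report(sample):
    print(item["vague"], item["too_long"])
# → ['basically', 'many', 'things', 'various'] False
```

Only the second sentence is flagged; the first is short and uses no listed vague word.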

2. Accuracy & Alignment With Known Knowledge

LLMs cross-check your statements against:

- internal knowledge

- external reference structures

- known conceptual patterns

If your content contradicts established knowledge, it is unlikely to be used in answers unless it provides strong, clear reasoning.

3. Extractability

Extractability = how easily AI can lift text into an answer.

High-extractability content includes:

- definitions

- lists

- bullet points

- frameworks

- stepwise explanations

- unambiguous sentences

Low extractability = low visibility.

Learn more here:

Extractability: The #1 LLM SEO Signal
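One rough way to audit extractability is to sort sentences into those that define a term and those that depend on earlier context. This Python sketch assumes two simplified rules. Sentences that open with words like "This" or "It" are treated as context-dependent. Sentences shaped like "X is/are/means/refers to/= Y" are treated as definitions. `classify_sentence` is a hypothetical helper, and both rules will misfire on some real sentences.

```python
import re

# Openers that usually refer back to an earlier sentence (an assumption, not a rule).
CONTEXT_DEPENDENT_OPENERS = {"this", "that", "these", "those", "it", "they", "such"}

# Rough shape of a definition: a capitalized term, then is/are/means/refers to/=.
DEFINITION_PATTERN = re.compile(r"^[A-Z][\w\s-]{0,60}?\s(?:is|are|means|refers to|=)\s")


def classify_sentence(sentence: str) -> str:
    """Label a sentence as 'context-dependent', 'definition', or 'other'."""
    words = sentence.split()
    if not words:
        return "other"
    if words[0].lower().strip(",.:;") in CONTEXT_DEPENDENT_OPENERS:
        return "context-dependent"
    if DEFINITION_PATTERN.match(sentence):
        return "definition"
    return "other"


for s in [
    "Extractability = how easily AI can lift text into an answer.",
    "This is why Pillar #1 includes 25 supporting articles.",
    "Structure enhances interpretability.",
]:
    print(classify_sentence(s), "|", s)
# → definition | Extractability = how easily AI can lift text into an answer.
# → context-dependent | This is why Pillar #1 includes 25 supporting articles.
# → other | Structure enhances interpretability.
```

The opener check runs first so that a sentence like "This is why..." is not mistaken for a definition.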

4. Structure & Organization

LLMs prefer content that is:

- well-organized

- divided with clear headings

- written in logical order

- supported with examples and frameworks

Structure enhances interpretability.

To see how structure affects meaning, read:

Semantic Search vs LLM Search

5. Consistency Across Pages

Models evaluate whether your explanations match across your site.

Terminology stability is crucial.

Inconsistent terms weaken:

- authority

- classification

- entity clarity

For deeper detail, see:

Entity-Based Optimization Explained
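Terminology stability is easier to maintain when drift is detected automatically. The Python sketch below scans page text for alternative spellings of a canonical term and reports which pages use them. The page paths, the canonical term, its variants, and the `inconsistent_pages` helper are hypothetical examples. The sketch also assumes that no variant is a substring of another, since overlapping forms would be reported twice.

```python
import re


def inconsistent_pages(pages: dict[str, str], variants: dict[str, list[str]]) -> dict[str, list[str]]:
    """Map each page to the non-canonical term variants it uses.

    `pages` maps a URL path to its plain text; `variants` maps each canonical
    term to the alternative spellings you want to phase out.
    """
    findings: dict[str, list[str]] = {}
    for url, text in pages.items():
        found = []
        for forms in variants.values():
            for form in forms:
                # Word boundaries avoid matching inside longer words; case is ignored.
                if re.search(r"\b" + re.escape(form) + r"\b", text, flags=re.IGNORECASE):
                    found.append(form)
        if found:
            findings[url] = found
    return findings


# Hypothetical pages and terms, for illustration only.
pages = {
    "/what-is-llm-seo/": "LLM SEO is the practice of making content answer-ready.",
    "/extractability/": "LLM optimization rewards extractable text.",
    "/authority/": "AI SEO depends on consistent entities.",
}
variants = {"LLM SEO": ["AI SEO", "LLM optimization"]}
print(inconsistent_pages(pages, variants))
# → {'/extractability/': ['LLM optimization'], '/authority/': ['AI SEO']}
```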

6. Safety & Risk Avoidance

LLMs avoid content that appears:

- speculative

- contradictory

- vague

- incomplete

- risky to quote

- misaligned with internal knowledge

If content introduces uncertainty, it is unlikely to be selected.

7. Depth & Breadth of Topic Coverage

High-quality content demonstrates:

- full understanding of a topic cluster

- coverage of supporting concepts

- consistent reinforcement

This is why Pillar #1 includes 25 supporting articles.

Internal topical consistency strengthens authority.

Learn more here:

What Counts as Authority in LLM SEO
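A topic cluster only reinforces itself if the pillar and its supporting articles actually link to each other. The Python sketch below assumes you already have a map of each page to the internal URLs it links to, for example from a site crawl. It reports where the pillar-to-supporting loop is broken. The URLs and the `cluster_gaps` helper are hypothetical.

```python
def cluster_gaps(links: dict[str, set[str]], pillar: str, supporting: list[str]) -> dict[str, list[str]]:
    """Find supporting pages that break the pillar <-> supporting link loop.

    `links` maps each page URL to the set of internal URLs it links to.
    """
    pillar_links = links.get(pillar, set())
    return {
        # Supporting pages that never point back to the pillar.
        "missing_link_to_pillar": [p for p in supporting if pillar not in links.get(p, set())],
        # Supporting pages the pillar itself never links to.
        "not_linked_from_pillar": [p for p in supporting if p not in pillar_links],
    }


# Hypothetical crawl result.
links = {
    "/pillar/": {"/a/", "/b/"},
    "/a/": {"/pillar/", "/b/"},
    "/b/": {"/a/"},
    "/c/": {"/pillar/"},
}
print(cluster_gaps(links, "/pillar/", ["/a/", "/b/", "/c/"]))
# → {'missing_link_to_pillar': ['/b/'], 'not_linked_from_pillar': ['/c/']}
```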

Key Components of High-Quality Content for LLMs

1. Semantic clarity

Simple, precise, definable.

2. Logical structure

Headings, lists, definitions.

3. Accurate information

Aligned with known patterns.

4. Consistent terminology

Stable across all pages.

5. Extractable statements

Liftable by AI without editing.

6. Reinforcement across pages

Clustered and interconnected.

7. Safe and reliable

Low-risk for the model to cite.

Common Misunderstandings About Content Quality

- Keywords do not influence LLM content quality

- Backlinks do not increase authority

- Long content is not automatically better

- Metadata is rarely used by AI models

- Updating content does not instantly update AI models

- AI search quality ≠ Google search quality

LLM content quality is about meaning, not metadata.

Mini-Framework: The LLM Content Quality Model

1. Clarity

Is the meaning immediately understandable?

2. Structure

Is the content organized with defined sections?

3. Extractability

Can AI reuse your content cleanly?

4. Accuracy

Does your content reinforce known knowledge?

5. Consistency

Does your message remain stable across your entire cluster?

Mastering these five gives your content the best chance of becoming answer material.
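The five-part model can double as a per-page editorial checklist. The Python sketch below records one yes/no answer per question and lists the gaps. It assumes the five criteria carry equal weight, which the model itself does not specify. `ContentQualityCheck` is a hypothetical name, and the score reflects your own review rather than any LLM's internal judgment.

```python
from dataclasses import dataclass, fields


@dataclass
class ContentQualityCheck:
    """One yes/no answer per question in the five-part model (equal weights assumed)."""

    clarity: bool          # Is the meaning immediately understandable?
    structure: bool        # Is the content organized with defined sections?
    extractability: bool   # Can AI reuse the content cleanly?
    accuracy: bool         # Does the content reinforce known knowledge?
    consistency: bool      # Is the message stable across the cluster?

    def gaps(self) -> list[str]:
        """Names of the criteria this page does not yet meet, in model order."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

    def score(self) -> int:
        """Number of criteria met, out of five."""
        return len(fields(self)) - len(self.gaps())


page = ContentQualityCheck(clarity=True, structure=True, extractability=False, accuracy=True, consistency=False)
print(page.score(), page.gaps())
# → 3 ['extractability', 'consistency']
```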

Frequently Asked Questions

How do LLMs define “content quality”?

LLMs define quality based on clarity, coherence, completeness, and usefulness. High-quality content clearly explains concepts, stays consistent across pages, and contains extractable statements that can be reused safely inside AI-generated answers.

How is content quality for LLMs different from traditional SEO quality signals?

Traditional SEO evaluates quality through backlinks, user engagement, E-E-A-T, and technical factors. LLMs evaluate meaning: entity clarity, semantic consistency, precision of explanations, and how well content fits into generated answers. They do not use link-based authority metrics.

Why do extractable statements matter so much to LLM content scoring?

Extractable statements are short, self-contained sentences that define concepts clearly. Because they can be copied into answers without distortion, LLMs treat them as high-quality signals and prioritize sources that provide them consistently.

What patterns do LLMs analyze to judge content quality?

Models look for clear definitions, structured explanations, examples, stable terminology, repeated reinforcement across pages, and consistent relationships between ideas. These patterns help the model create a reliable internal representation of your expertise.

Do contradictions on my website affect how LLMs judge quality?

Yes. Contradictory statements weaken the model’s confidence in your content. When definitions or explanations change from page to page, LLMs treat your site as less reliable and may avoid using it in generated answers.

Does deeper, more detailed content score higher with LLMs?

Yes, but only when depth increases clarity rather than noise. LLMs reward content that offers precise definitions, structured explanations, frameworks, and examples. Long content without structure does not help and may reduce clarity.

Do LLMs care about readability or grade level?

LLMs favor content that is easy to parse. Short sentences, clear transitions, and stable terminology improve readability and therefore increase quality scoring. Writing at a higher grade level can still score well if the definitions and relationships are crystal clear.

Do LLMs check whether content is factually correct?

LLMs evaluate factual consistency based on internal training data and retrieval sources. While they cannot fully verify truth, they downgrade content that contradicts widely accepted knowledge unless it provides strong, clear reasoning and definitions.

Does schema markup improve content quality for LLMs?

Schema markup boosts perceived quality by making the meaning of your content explicit. It clarifies entity types, relationships, and topical relevance, helping LLMs interpret your content more accurately and with higher confidence.
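FAQ content like this section maps onto the schema.org `FAQPage` type. Each `Question` in that type carries an `acceptedAnswer` of type `Answer`. The Python sketch below builds that JSON-LD from question/answer pairs using only the standard `json` module. The output is meant to go inside a `<script type="application/ld+json">` tag. `faq_jsonld` is a hypothetical helper. Whether a given model reads this markup is the article's claim, and the snippet cannot confirm it.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    # ensure_ascii=False keeps curly quotes and other non-ASCII text readable.
    return json.dumps(data, indent=2, ensure_ascii=False)


print(faq_jsonld([
    ("Do LLMs care about readability or grade level?",
     "LLMs favor content that is easy to parse."),
]))
```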

Does outdated content hurt quality scores in LLMs?

Yes. Outdated or contradictory information reduces the model’s trust in your expertise. Updating content to reflect current definitions and removing obsolete sections strengthens your authority and improves how models interpret your content’s quality.

What content structures do LLMs interpret as high quality?

LLMs prefer content with clear headings, ordered lists, definitions, examples, frameworks, FAQs, and repeated reinforcement of key concepts. These structures make your content easier to chunk, extract, and reuse inside answers.

What is the fastest way to improve how LLMs evaluate my content quality?

The fastest improvements come from standardizing terminology, adding extractable definitions, cleaning up contradictions, strengthening entity descriptions, and rewriting long paragraphs into structured, reusable statements.
