How AI Models Store, Organize, and Reason Over Information to Generate Accurate Answers

Definition

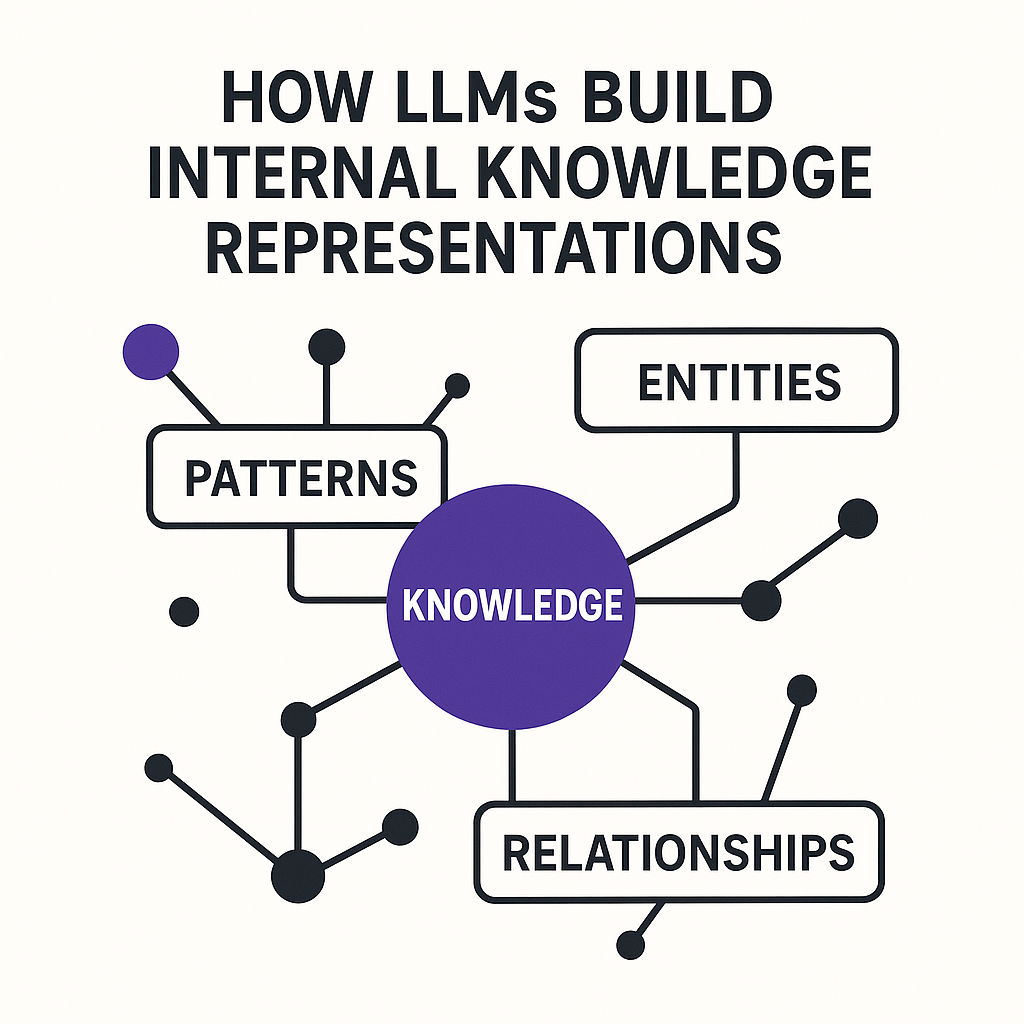

Internal knowledge representation refers to the way large language models (LLMs) store and organize information inside their parameters.

LLMs do not store facts as files or documents.

Instead, their parameters (weights) encode patterns, entities, and relationships that reflect how concepts connect.

This internal structure shapes:

- what an LLM “knows”

- how it interprets websites

- what it retrieves

- what it selects for answers

For foundational context, see:

How AI Models Interpret Websites

How LLMs Actually Build Knowledge Inside the Model

1. Pattern Learning (Embeddings & Associations)

LLMs learn through examples — billions of sentences that reveal patterns such as:

- concepts

- definitions

- roles

- categories

- cause-and-effect relationships

These patterns are encoded as dense vectors (embeddings) inside the model.
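To make the step from patterns to vectors concrete, here is a toy, count-based sketch. It is not how an LLM learns: real models learn dense embeddings by gradient descent over huge corpora, while this example builds sparse co-occurrence counts from a hypothetical five-sentence corpus. The idea it illustrates is the same, though: words used in similar contexts end up with similar vectors. The corpus, window size, and function names are assumptions for illustration.

```python
from collections import Counter, defaultdict
from math import sqrt

# Hypothetical toy corpus; real models train on trillions of tokens.
CORPUS = [
    "dentist treats teeth",
    "dentist cleans teeth",
    "orthodontist treats teeth",
    "plumber fixes pipes",
    "plumber repairs pipes",
]


def cooccurrence_vectors(sentences, window=2):
    """Map each word to counts of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()
        for i, word in enumerate(tokens):
            start, end = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(start, end):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


vecs = cooccurrence_vectors(CORPUS)
print(round(cosine(vecs["dentist"], vecs["orthodontist"]), 2))  # 0.87
print(round(cosine(vecs["dentist"], vecs["plumber"]), 2))  # 0.0
```

Here "dentist" and "orthodontist" share contexts ("treats", "teeth") and score about 0.87, while "dentist" and "plumber" share none and score 0.0.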

2. Entity Formation (Concept Stabilization)

Over the course of training, repeated exposure consolidates these patterns into entities such as:

- business types

- processes

- products

- industries

- roles

- frameworks

Entities become stable when they appear consistently across multiple contexts.

Learn how entities affect rankings:

Entity-Based Optimization Explained
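One rough way to picture "consistent across multiple contexts" is to compare the contexts an entity is described in. The sketch below scores a hypothetical entity, "Acme", by the average pairwise similarity of those descriptions. This stability score is an assumption made up for illustration, not a metric any model exposes; it only shows why consistent descriptions cluster together and mixed ones do not.

```python
from collections import Counter
from itertools import combinations
from math import sqrt


def bag_of_words(text):
    """Lowercased word counts for one context snippet."""
    return Counter(text.lower().split())


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0


def stability(contexts):
    """Hypothetical proxy: mean pairwise similarity of the contexts an entity appears in."""
    bags = [bag_of_words(c) for c in contexts]
    pairs = list(combinations(bags, 2))
    return sum(cosine(a, b) for a, b in pairs) / len(pairs)


# Hypothetical descriptions of the same entity from different sources.
consistent = [
    "acme is a dental clinic in austin",
    "acme is a dental clinic offering implants",
    "acme dental clinic in austin",
]
inconsistent = [
    "acme is a dental clinic in austin",
    "acme sells marketing software",
    "acme is a plumbing company",
]

print(stability(consistent) > stability(inconsistent))  # True
```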

3. Relationship Mapping (Semantic Graph Formation)

LLMs build knowledge by identifying how concepts relate:

- X is a type of Y

- X does Y

- X requires Y

- X depends on Y

Models use these relationships to reason and generate structured answers.

See also:

Semantic Search vs LLM Search
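Written out explicitly, these relationships look like (subject, relation, object) triples. An LLM holds such links implicitly in its weights rather than in a table, so the sketch below is closer to a traditional knowledge graph than to the model itself. The triples and relation names are hypothetical; the point is how "X is a type of Y" chains let a system infer facts nobody stated directly.

```python
# Hypothetical (subject, relation, object) triples.
TRIPLES = {
    ("orthodontist", "is_a", "dentist"),
    ("dentist", "is_a", "healthcare provider"),
    ("dentist", "does", "teeth cleaning"),
    ("dentist", "requires", "dental license"),
    ("dental clinic", "depends_on", "dentist"),
}


def objects(subject, relation, triples=TRIPLES):
    """All objects linked to `subject` by `relation`."""
    return {o for s, r, o in triples if s == subject and r == relation}


def is_a_closure(entity, triples=TRIPLES):
    """Follow is_a edges transitively: if X is a Y and Y is a Z, then X is a Z."""
    seen, frontier = set(), [entity]
    while frontier:
        current = frontier.pop()
        for parent in objects(current, "is_a", triples):
            if parent not in seen:
                seen.add(parent)
                frontier.append(parent)
    return seen


print(sorted(is_a_closure("orthodontist")))  # ['dentist', 'healthcare provider']
print(objects("dentist", "requires"))  # {'dental license'}
```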

4. Concept Compression (Parameter Encoding)

Knowledge is compressed into the model’s weights:

- not documents

- not web pages

- not exact sentences (verbatim memorization occurs mainly for text repeated many times in the training data)

Instead, the model stores representations of meaning that allow it to generalize.
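A bigram language model is a very small stand-in for this idea. It keeps only counts of which word follows which, never the sentences themselves, yet it can assign probability to a sentence it never saw. Real LLMs use neural networks rather than count tables; the training sentences and function names below are hypothetical.

```python
from collections import Counter, defaultdict

# Hypothetical training sentences.
TRAINING = [
    "the dentist treats teeth",
    "the orthodontist treats patients",
]


def train_bigrams(sentences):
    """Store only word-to-next-word counts, not the sentences themselves."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def sentence_probability(counts, sentence):
    """Product of P(next | prev) under the bigram counts (0.0 if a word has no history)."""
    tokens = ["<s>"] + sentence.split()
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        total = sum(counts[prev].values())
        if total == 0:
            return 0.0
        prob *= counts[prev][nxt] / total
    return prob


model = train_bigrams(TRAINING)
print(sentence_probability(model, "the dentist treats patients"))  # 0.25
```

"the dentist treats patients" never appears in the training data, yet it scores 0.25, the same as "the dentist treats teeth", which does. That is generalization from stored patterns rather than stored text.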

5. Reinforcement Through Repetition

If the model sees a concept expressed consistently across sources:

- confidence increases

- representations strengthen

- the entity becomes easier to retrieve

This is why your website needs consistent, reinforced definitions across multiple pages.

Learn more:

What Counts as Authority in LLM SEO
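The link between repetition and confidence can be illustrated with a simple add-one (Laplace) estimate. This is not how an LLM computes confidence; it is a hedged analogy, assuming three competing definitions of an entity exist and every new source repeats the same one.

```python
from fractions import Fraction


def smoothed_probability(consistent_mentions, total_mentions, num_candidates):
    """Add-one (Laplace) estimate of the probability of the consistently repeated definition."""
    return Fraction(consistent_mentions + 1, total_mentions + num_candidates)


# Hypothetical setup: 3 competing definitions, and every source repeats the same one.
for n in (1, 5, 20):
    print(n, round(float(smoothed_probability(n, n, num_candidates=3)), 2))
```

The estimate rises from 0.5 after one consistent mention to 0.75 after five and about 0.91 after twenty.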

6. Conflict Resolution (Consensus Prioritization)

When information sources disagree, models prefer:

- the most consistent explanations

- the most common patterns

- the safest formulations

- the clearest entities

You can influence this by providing stable definitions and unambiguous explanations.

See:

How LLMs Handle Conflicting Information
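As a crude stand-in for "prefer the most consistent explanation," the sketch below normalizes descriptions of a hypothetical entity collected from five sources and picks the most frequent one. Models do not run an explicit vote like this; the example only shows why one stable definition repeated across sources outweighs a stray alternative.

```python
from collections import Counter


def consensus(definitions):
    """Return the most frequent normalized definition and its share of sources."""
    # Lowercase and collapse whitespace so trivial variations count as the same definition.
    normalized = [" ".join(d.lower().split()) for d in definitions]
    counts = Counter(normalized)
    winner, votes = counts.most_common(1)[0]
    return winner, votes / len(normalized)


# Hypothetical descriptions of one entity from five sources.
sources = [
    "Acme is a dental clinic in Austin",
    "acme is a dental clinic in austin",
    "Acme is a dental  clinic in Austin",
    "Acme is a marketing agency",
    "Acme is a dental clinic in Austin",
]

print(consensus(sources))  # ('acme is a dental clinic in austin', 0.8)
```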

7. Updating Knowledge Through Retrieval

LLM systems with retrieval (such as search-enabled assistants) extend internal knowledge by retrieving:

- fresh data

- structured pages

- updated definitions

- authoritative explanations

Retrieved content is blended with internal representations during answer generation.

To understand retrieval behavior, read:

LLM Ranking Factors Explained
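The blending step can be sketched as retrieval-augmented prompting: find the passages most related to the question, then place them in the prompt alongside it. Production systems use search engines or vector indexes; this minimal version ranks hypothetical documents by word overlap (Jaccard similarity), and the function names are assumptions. The retrieved text only enters the prompt; the model's weights stay the same.

```python
def tokenize(text):
    """Lowercase, strip basic punctuation, and return the set of words."""
    cleaned = text.lower().replace("?", " ").replace(".", " ").replace(",", " ")
    return set(cleaned.split())


def retrieve(query, documents, k=2):
    """Return the k documents with the highest Jaccard word overlap with the query."""
    q = tokenize(query)

    def score(doc):
        d = tokenize(doc)
        union = q | d
        return len(q & d) / len(union) if union else 0.0

    return sorted(documents, key=score, reverse=True)[:k]


def build_prompt(query, documents, k=2):
    """Place the retrieved passages in the prompt next to the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Answer using the context where relevant.\n\nContext:\n{context}\n\nQuestion: {query}"


# Hypothetical pages from a hypothetical site.
DOCS = [
    "Acme Dental offers emergency appointments on weekends.",
    "Acme Dental is a family dental clinic in Austin.",
    "Plumbing tips for winter pipes.",
]

print(build_prompt("Does Acme Dental offer weekend appointments?", DOCS))
```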

What LLMs DO NOT Do

LLMs do not:

- store documents

- index pages

- rely on keyword matching

- keep a database of sources

- retrieve your entire website

- remember updates instantly

They rely on patterns and meaning, not files or URLs.

Why This Matters for LLM SEO

Your visibility depends on how well your content fits into the model’s internal knowledge structure.

This means your site must:

- define entities clearly

- reinforce meanings across pages

- avoid contradictions

- use consistent terminology

- provide extractable statements

- match known patterns

Your goal is to “train” the model to understand you as a stable, trustworthy entity.
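Consistent terminology is easy to check mechanically. The sketch below scans a few hypothetical pages for variant names that should be replaced with one canonical term; the term list, page paths, and function name are placeholders for your own.

```python
import re

CANONICAL = "LLM SEO"
# Hypothetical variants that should be replaced with the canonical term.
VARIANTS = ["AI SEO", "LLM optimization", "generative engine optimization"]


def find_variant_terms(pages, variants=VARIANTS):
    """Map each page path to the non-canonical variants it uses (case-insensitive, whole words)."""
    report = {}
    for path, text in pages.items():
        hits = [v for v in variants if re.search(rf"\b{re.escape(v)}\b", text, re.IGNORECASE)]
        if hits:
            report[path] = hits
    return report


# Hypothetical page text keyed by path.
pages = {
    "/services": "We provide LLM SEO audits.",
    "/about": "Our AI SEO team helps brands.",
    "/blog/intro": "What is generative engine optimization?",
}

for path, hits in find_variant_terms(pages).items():
    print(f"{path}: replace {hits} with '{CANONICAL}'")
```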

Mini-Framework: The Knowledge Representation Stack

1. Patterns

Raw statistical associations

2. Entities

Stable concepts the model understands

3. Relationships

How entities connect semantically

4. Definitions

Extractable conceptual anchors

5. Reinforcement

Cross-page repetition strengthens meaning

6. Retrieval Integration

External content supplements internal knowledge at answer time, without changing the model's weights

Common Misunderstandings

- LLMs do not store websites

- Updating your site does not update the model

- LLMs do not retrieve your entire domain

- Models do not generally memorize pages verbatim

- Keyword repetition alone does not shape internal knowledge

- Backlinks do not build LLM authority

Everything depends on clarity, stability, reinforcement, and meaning.

Frequently Asked Questions

How do LLMs build knowledge in the first place?

LLMs build knowledge by learning patterns from massive datasets, typically by training to predict the next word (token) in text. They do not store facts like a database. Instead, they learn how likely words and concepts are to appear together, which lets them reconstruct information when needed.
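In practice, these likelihoods usually come from a softmax step that turns the model's raw scores (logits) for candidate next words into probabilities that sum to 1. The logits below are invented for illustration.

```python
from math import exp


def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: exp(s - m) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


# Hypothetical logits for the word after "A dentist treats ..."
logits = {"teeth": 4.0, "patients": 3.0, "pipes": 0.5}
probs = softmax(logits)
print(max(probs, key=probs.get))  # teeth
```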

What’s the difference between LLM training and real-time answering?

Training builds the model’s long-term internal knowledge using historical data. Inference—when the model generates answers—uses that internal knowledge plus any retrieved information. Most answers blend both sources.

Do LLMs store facts, or do they store something else?

LLMs store patterns, not facts. They compress concepts into high-dimensional vectors that capture relationships. When asked a question, they reconstruct likely answers based on these patterns rather than retrieving a stored statement.

How do entities help LLMs build stable knowledge?

Entities act as anchor points inside the model’s internal space. When your brand, product, or concept is clearly defined across multiple sources, the model builds a stronger and more stable representation, increasing accuracy and visibility in answers.

Is an LLM’s “knowledge” the same as a traditional knowledge graph?

No. A knowledge graph stores explicit relationships like a database. An LLM stores implicit relationships inside its weights. It infers connections rather than referencing a structured graph unless a retrieval or hybrid system is used.

Why do clear definitions strengthen an LLM’s understanding of my brand?

Clear, repeated definitions give the model strong semantic patterns to learn from. When the same entity description appears across your site, articles, and schema markup, the model forms a more accurate representation of who you are and what you do.

Do LLMs instantly update their knowledge when my website changes?

No. Internal model knowledge updates only when the model is retrained or fine-tuned. Retrieval-augmented systems may pull in recent updates, but stable understanding requires repeated signals over time.

How does retrieval influence the knowledge an LLM uses in answers?

Retrieval allows the model to temporarily supplement its internal knowledge with external documents. The model still decides which pieces to use, but retrieval increases accuracy and freshness when answering time-sensitive or domain-specific questions.

Do contradictions in my content affect how LLMs build knowledge?

Yes. Contradictions weaken the model’s internal representation of your entity. If your messaging changes across pages, the model becomes uncertain and may avoid using your content in answers or misrepresent your brand.

Why can’t LLMs remember everything they’ve been trained on?

LLMs compress trillions of tokens into a finite number of parameters. This forces trade-offs: they keep patterns that appear frequently and discard noise or low-signal details. Strong, repeated definitions make it through compression—weak signals do not.
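A back-of-envelope calculation shows why verbatim storage of all training text is impossible. The model size, dataset size, and vocabulary below are hypothetical round numbers, and raw bits of weight storage are only a loose upper bound on what a model can retain.

```python
from math import log2


def storage_bits_per_token(num_parameters, bits_per_parameter, num_training_tokens):
    """Upper bound on raw weight storage available per training token."""
    return num_parameters * bits_per_parameter / num_training_tokens


def verbatim_bits_per_token(vocab_size):
    """Bits needed to write down one token ID with no compression."""
    return log2(vocab_size)


# Hypothetical model and dataset sizes, chosen only for illustration.
available = storage_bits_per_token(7e9, 16, 2e12)
needed = verbatim_bits_per_token(50_000)
print(f"available ≈ {available:.3f} bits/token, verbatim ≈ {needed:.1f} bits/token")
```

Under these assumptions the weights offer about 0.056 bits per training token, while writing each token down uncompressed would take about 15.6 bits, so the model has to keep compressed patterns rather than the text itself.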

How can I improve the way LLMs understand my brand or content?

You can improve model understanding by clarifying key entity definitions, using stable terminology, creating extractable explanations, reinforcing concepts across multiple pages, and adding structured data that explicitly defines your entities.
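Structured data for an entity is commonly written as schema.org JSON-LD. A minimal sketch, assuming a hypothetical brand and example.com URLs, that builds an Organization object in Python; the printed JSON goes inside a <script type="application/ld+json"> tag on the page. Keeping the description identical to the definition used on your pages reinforces the same entity description everywhere.

```python
import json


def organization_jsonld(name, url, description, same_as):
    """Build a schema.org Organization object as a Python dict."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "description": description,
        "sameAs": same_as,
    }


data = organization_jsonld(
    name="Acme Dental",  # hypothetical brand
    url="https://www.example.com/",
    description="Acme Dental is a family dental clinic in Austin.",
    same_as=["https://www.linkedin.com/company/example"],  # hypothetical profile URL
)
print(json.dumps(data, indent=2))
```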

Do LLMs truly “know” things or do they just infer patterns?

LLMs do not “know” facts the way humans do. They infer likely patterns of meaning based on their training. Their knowledge is probabilistic, not literal—making clarity, consistency, and reinforcement essential for accurate outputs.

Do external sources influence how LLMs build knowledge about my brand?

Yes. Mentions across articles, directories, schema markup, and social profiles help reinforce your entity. When multiple sources repeat the same definitions, the model builds a more confident and stable internal representation of your brand.

💡 Try this in ChatGPT

- Summarize the article "How LLMs Build Internal Knowledge Representations" from https://www.rankforllm.com/how-llms-build-knowledge/ in 3 bullet points for a board update.

- Turn the article "How LLMs Build Internal Knowledge Representations" (https://www.rankforllm.com/how-llms-build-knowledge/) into a 60-second talking script with one example and one CTA.

- Extract 5 SEO keywords and 3 internal link ideas from "How LLMs Build Internal Knowledge Representations": https://www.rankforllm.com/how-llms-build-knowledge/.

- Create 3 tweet ideas and a LinkedIn post that expand on this LLM SEO topic using the article at https://www.rankforllm.com/how-llms-build-knowledge/.

Tip: Paste the whole prompt (with the URL) so the AI can fetch context.
